As recently mentioned, I’ve changed the website’s theme. While doing so, I realized my deployment method, which relied solely on Gitlab CI/CD and Helm, was as simple as it was unsatisfactory: I had to give Gitlab access to my cluster (although it’s not too dire with proper RBAC management), on top of some other minor grievances.

Since I’ve been using ArgoCD more and more lately, I figured: why not make it so Gitlab only handles the “build” and packaging part of things, and let Argo do the rest? As an added bonus it GREATLY complexifies the whole thing, what’s not to love?

But yeah, while a Gitlab Pages deployment would have been way, way easier and smoother (and I strongly recommend it over what I’m about to describe if it fits your use-case), it only works for Gitlab-hosted web applications, which greatly restricts its applicability. This deployment pattern, however, should work with any application that can be built using Gitlab CI/CD and deployed by ArgoCD on a Kubernetes cluster.

If you’re reading this on www.northamp.fr, it’s likely that it worked :)

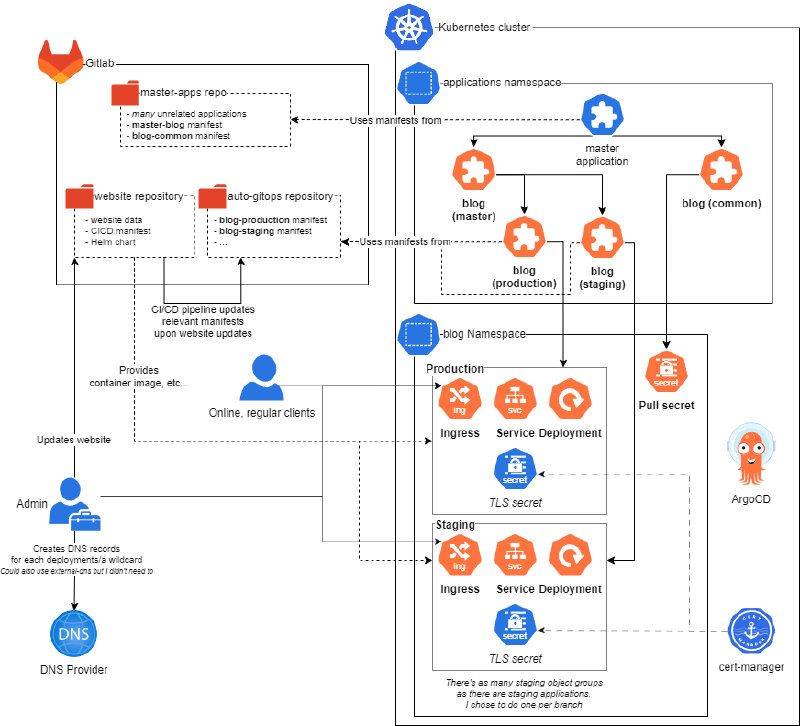

Diagram #

In a nutshell, the main ideas are:

- Rely on Gitlab to store

  - Website data

  - Containers & Helm chart

    - I haven’t bothered properly publishing the latter for now, it lives in the same repo as the blog and that’s fine by me

  - ArgoCD manifests (GitOps!)

- … and rely on Gitlab CI/CD for

  - Container builds

  - ArgoCD manifest updates

- … then on ArgoCD by extensively (ab)using the app of apps pattern

Gitlab (CI/CD) is only used for GitOps and application builds, while ArgoCD handles all the CD side of things, which makes it possible to use both to their fullest extent with Gitlab environments, Argo’s UI, etc.!

Setting it up! #

This won’t be a textbook procedure, and will assume that the reader already has a good grasp of Kubernetes. It’ll also be opinionated towards certain solutions (e.g. I use Traefik as ingress controller and Let’s Encrypt, through cert-manager, for certificates), and those are assumed to be set up and ready for use. Basically, you may have to extensively tailor the solution to your own needs (but feel free to contact me if necessary :).

Repositories #

As shown in the diagram, I rely on three different repos:

- The website’s own repo

- A manually managed GitOps repo

- A CI/CD-managed GitOps repo

The last two could well be just one repo, but I chose to separate them as I don’t like committing to repositories in the context of a Gitlab CI to begin with, and even less when I frequently, manually make modifications to it myself (those applications aren’t gonna update themselves!).

Website #

As you’ve probably understood by now, the guinea pig for this deployment pattern has been this very website, my blog. It’s a simple Hugo website, built using Gitlab CI/CD, containerized with Kaniko, and pushed to Gitlab registry. Its repository structure is something like:

```
website/
|-> .gitlab-ci.yml
|-> Dockerfile
|-> helm/
    |-> <chart that deploys the website>
|-> <miscellaneous Hugo dirs and configs>
```

I won’t post the entire website’s code directory as it’d be pointless; what’s more interesting is the Gitlab CI manifest though:

```yaml
variables:
  GIT_SUBMODULE_STRATEGY: recursive
  GIT_SUBMODULE_DEPTH: 1

stages:
  - build
  - dockerize
  - deploy

# Shared build template: builds the Hugo site and exports BASEURL to later jobs
.build:
  stage: build
  image: hugomods/hugo
  script:
    - hugo version
    - echo "Building Hugo website with baseURL ${BASEURL}"
    - hugo -d public_html --baseURL ${BASEURL}
    - echo -n "BASEURL=${BASEURL}" > url.env
  artifacts:
    paths:
      - public_html
    reports:
      dotenv: url.env
    expire_in: 1 day

build staging:
  extends: .build
  variables:
    BASEURL: https://${CI_COMMIT_REF_NAME}.${STAGING_URL}/
  rules:
    - if: $CI_COMMIT_BRANCH != $CI_DEFAULT_BRANCH
      when: on_success

build production:
  extends: .build
  variables:
    BASEURL: https://${PRODUCTION_URL}/
  rules:
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
      when: on_success

dockerize:
  stage: dockerize
  image:
    name: gcr.io/kaniko-project/executor:debug
    entrypoint: [""]
  script:
    - mkdir -p /kaniko/.docker
    - echo "{\"auths\":{\"${CI_REGISTRY}\":{\"username\":\"${CI_REGISTRY_USER}\",\"password\":\"${CI_REGISTRY_PASSWORD}\"}}}" > /kaniko/.docker/config.json
    - /kaniko/executor --context ${CI_PROJECT_DIR} --dockerfile ${CI_PROJECT_DIR}/Dockerfile --destination ${CI_REGISTRY_IMAGE}:${CI_COMMIT_REF_NAME}-${CI_PIPELINE_IID}

argocd:create manifest:
  stage: deploy
  image: alpine:latest
  variables:
    GIT_STRATEGY: none
  before_script:
    - apk add git
    - git config --global user.email "noreply@${CI_SERVER_HOST}"
    - git config --global user.name "ci-bot"
  script:
    - git clone https://oauth2:$ACCESS_TOKEN@a.gitlab.instance.net/auto-gitops-gitlab-repo.git
    # git clones into a directory named after the repository
    - cd auto-gitops-gitlab-repo
    - mkdir -p applications/blog
    - |
      cat > applications/blog/${CI_COMMIT_BRANCH}.yaml << EOF
      apiVersion: argoproj.io/v1alpha1
      kind: Application
      metadata:
        name: blog-${CI_COMMIT_BRANCH}
        namespace: 'argocd'
        # finalizers belong to metadata, not spec
        finalizers:
          - resources-finalizer.argocd.argoproj.io
      spec:
        destination:
          namespace: 'blog'
          server: 'https://kubernetes.default.svc'
        source:
          path: path/to/helm/chart
          repoURL: 'https://a.gitlab.instance.net/website-repo.git'
          targetRevision: ${CI_COMMIT_BRANCH}
          helm:
            parameters:
              - name: fullnameOverride
                value: blog-${CI_COMMIT_BRANCH}
              - name: image.tag
                value: ${CI_COMMIT_REF_NAME}-${CI_PIPELINE_IID}
              - name: ingress.enabled
                value: 'true'
              - name: 'ingress.hosts[0].host'
                value: $(basename ${BASEURL})
              - name: 'ingress.hosts[0].paths[0].path'
                value: /
              - name: 'ingress.hosts[0].paths[0].pathType'
                value: ImplementationSpecific
            values: |-
              imagePullSecrets:
                - name: blog-registry-credentials
              ingress:
                annotations:
                  kubernetes.io/ingress.class: traefik
                  traefik.ingress.kubernetes.io/router.tls: "true"
                  cert-manager.io/cluster-issuer: letsencrypt-prod
                tls:
                  - secretName: blog-${CI_COMMIT_BRANCH}-tls
                    hosts:
                      - $(basename ${BASEURL})
        project: selfhost
        syncPolicy:
          automated:
            prune: true
      EOF
    - git add .
    - git commit -m "update application for blog on branch ${CI_COMMIT_BRANCH}"
    - git push -o ci.skip https://oauth2:$ACCESS_TOKEN@a.gitlab.instance.net/auto-gitops-gitlab-repo.git --all
    - echo "Done! Site should be deployed within 5 minutes to ${BASEURL}"
  environment:
    name: blog-$CI_COMMIT_REF_NAME
    # BASEURL already contains the https:// scheme
    url: "${BASEURL}"
    on_stop: "argocd:delete manifest"

argocd:delete manifest:
  variables:
    GIT_STRATEGY: none
  stage: deploy
  image: alpine:latest
  before_script:
    - apk add git
    - git config --global user.email "noreply@${CI_SERVER_HOST}"
    - git config --global user.name "ci-bot"
  script:
    - git clone https://oauth2:$ACCESS_TOKEN@a.gitlab.instance.net/auto-gitops-gitlab-repo.git
    - cd auto-gitops-gitlab-repo
    - rm applications/blog/${CI_COMMIT_BRANCH}.yaml
    - git add .
    - git commit -m "remove application for blog on branch ${CI_COMMIT_BRANCH}"
    - git push -o ci.skip https://oauth2:$ACCESS_TOKEN@a.gitlab.instance.net/auto-gitops-gitlab-repo.git --all
    - echo "Done! Site deployed on ${BASEURL} should be going down soon"
  when: manual
  environment:
    name: blog-$CI_COMMIT_REF_NAME
    action: stop
```

Important things here are:

- I’ve set up two different build jobs, one for the master branch (which is assumed to be production) and the other for, well, any other branch (which are assumed to be staging). This is because Hugo needs the baseURL setting properly set up upon building the website. Said URL is passed on to the next jobs (through dotenv reports) for later usage

- I’m using Kaniko to containerize the website, not much to say here except that the resulting tag contains the pipeline’s unique ID, to trigger new ArgoCD deployments as much as necessary (remember to enable container registry cleanup!)

The ArgoCD manifest generation job is the main star of the show here: it commits a new (or replacement) manifest that’s relevant to the build that just took place. That manifest uses a Helm chart, stored within the website’s repo, that contains all the basic templates necessary to deploy the website. When combined with the other repos I’ll talk about next, it lets ArgoCD pick up on every change made to the website’s code and, depending on the branch where said change happened, deploy it on the production URL or a staging one!

And to keep things clean, I’m using Gitlab environments to trigger a job that deletes the manifest when the branch is deleted (which makes Argo remove every resource, since auto-prune is on).

Note that the last two jobs need a CI/CD variable named ACCESS_TOKEN, containing an access token with read_repository and write_repository permissions on the auto-gitops-gitlab-repo!
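The manifest above derives the ingress host with `$(basename ${BASEURL})`. That works because `basename` ignores the trailing slash and drops everything up to the last remaining `/`, which leaves only the hostname. A minimal sketch of that behaviour, assuming a base URL without any path component; the helper name `host_from_baseurl` and the example URLs are made up for illustration:

```shell
#!/bin/sh
# Hypothetical helper: extract the hostname from a base URL shaped like
# https://host/ (no path), the same way the CI job does with basename.
host_from_baseurl() {
  basename "$1"
}

# Example URLs are placeholders, not the real staging/production domains.
host_from_baseurl "https://my-branch.staging.example.org/"  # prints my-branch.staging.example.org
host_from_baseurl "https://www.example.org/"                # prints www.example.org
```

Since the staging host is built from the branch name, a branch containing `/` or `_` would likely produce an invalid hostname. Gitlab also exposes a predefined `CI_COMMIT_REF_SLUG` variable (the ref name lowercased, with anything outside `a-z0-9` replaced by `-`) that might be a safer building block, although that is not what the pipeline above uses.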

Manual GitOps repo #

That repository has existed since long before I decided to change the blog’s deployment, and contains most of my service deployments, following the classic “app of apps” ArgoCD pattern. It’s structured like:

```
manual-gitops-gitlab-repo/
|-> README.md
|-> applications/
    |-> application_example.yaml
    |-> master-blog.yaml
    |-> blog-common.yaml
|-> application_example/
    |-> README.md # bit of documentation on the deployed app, could well be ported to the wiki but I'm lazy :)
|-> master-blog/
    |-> README.md
|-> common-blog/
    |-> pullsecret.yaml
    |-> README.md
|-> applications.yaml # Contains the manifest for the app of apps ("master-apps") created on Argo - just archived here
```

I also won’t post the directory since it’s largely irrelevant, but the interesting applications are:

applications.yaml:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: master-apps
  namespace: argocd
spec:
  destination:
    namespace: applications
    server: 'https://a-kube-cluster'
  project: whatever
  source:
    path: applications
    repoURL: 'https://a.gitlab.instance.net/manual-gitops-gitlab-repo.git' # this repository!
    targetRevision: HEAD
  syncPolicy:
    syncOptions:
      - CreateNamespace=true
```

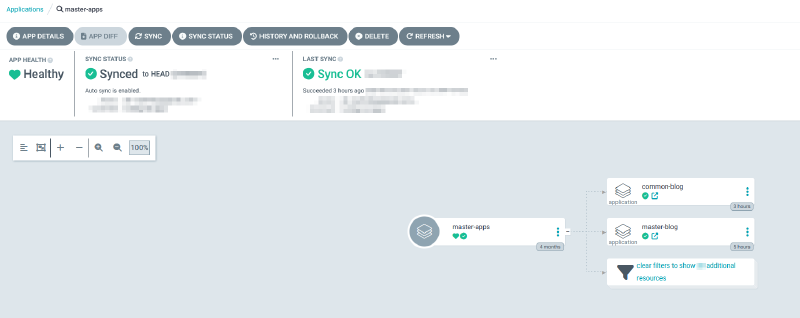

The fabled app of apps, there’s plenty of literature on the net about it. Moving on…

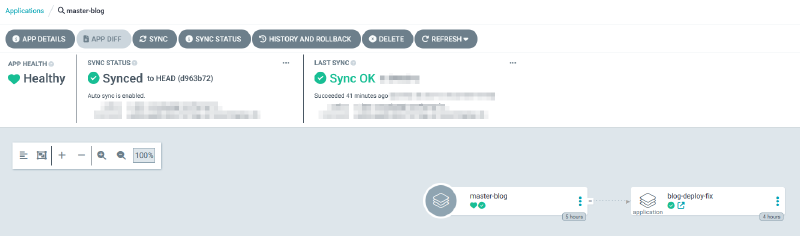

applications/master-blog.yaml:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: master-blog
  namespace: argocd
spec:
  project: whatever
  source:
    repoURL: 'https://a.gitlab.instance.net/auto-gitops-gitlab-repo.git' # the gitlab-ci managed repo!
    path: applications/blog
    targetRevision: HEAD
  destination:
    server: 'https://a-kube-cluster'
    namespace: applications
  syncPolicy:
    automated:
      prune: true
    syncOptions:
      - CreateNamespace=true
```

This application picks up what goes on in the auto-gitops repo; I’ll get back to it later.

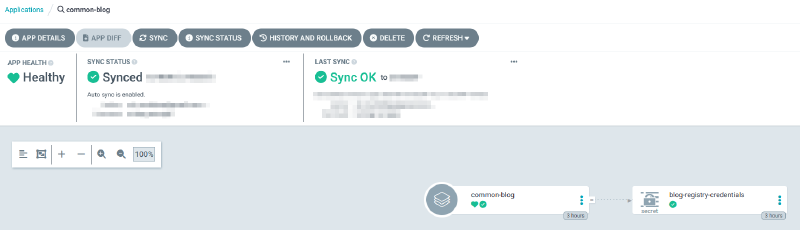

applications/blog-common.yaml:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: common-blog
  namespace: argocd
spec:
  project: whatever
  source:
    repoURL: 'https://a.gitlab.instance.net/manual-gitops-gitlab-repo.git' # this one!
    path: common-blog
    targetRevision: HEAD
  destination:
    server: 'https://a-kube-cluster'
    namespace: blog
  syncPolicy:
    automated:
      prune: true
    syncOptions:
      - CreateNamespace=true
```

This one, however, grabs manifests created in this very repository under the common-blog dir; I use it to create the pull secret used to download the images. It’s not lumped in with the individual deployments (because it’d be pointless), nor created manually, because I want everything to be managed by Argo. So yeah, all it contains is a single secret resource.
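The secret itself isn’t reproduced here. As a rough sketch only, a registry pull secret is typically a `kubernetes.io/dockerconfigjson` Secret, and the script below prints one. The registry host, user and token are placeholders and the function name `make_pull_secret` is hypothetical; only the `blog-registry-credentials` name and the `blog` namespace come from the manifests above.

```shell
#!/bin/sh
# Hypothetical helper: print a kubernetes.io/dockerconfigjson Secret manifest.
# Arguments: registry host, username, token (all placeholders in this sketch).
make_pull_secret() {
  registry="$1"; user="$2"; token="$3"
  # The "auth" field holds "user:token", base64-encoded on a single line.
  auth=$(printf '%s:%s' "$user" "$token" | base64 | tr -d '\n')
  # The whole Docker config JSON is base64-encoded again for the data field.
  config=$(printf '{"auths":{"%s":{"auth":"%s"}}}' "$registry" "$auth" | base64 | tr -d '\n')
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: blog-registry-credentials
  namespace: blog
type: kubernetes.io/dockerconfigjson
data:
  .dockerconfigjson: ${config}
EOF
}

# Placeholder values, assumed for illustration only.
make_pull_secret "registry.gitlab.example" "deploy-user" "not-a-real-token"
```

Keep in mind that the resulting value is only base64-encoded, not encrypted, so whoever can read the repository can read the token.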

And with that setup, everything is working properly, save for one last task: create the auto-gitops repo!

Auto GitOps repo #

Since it’s managed with CI/CD, all there is to do is create the repo, create an Access Token able to read/write to it and give it to Argo and Gitlab CI.

I’ve added a README to it but it’s not even mandatory. While it’s empty until the first pipeline run that would generate a manifest, after a few branches and deployments it’d look like:

auto-gitops-gitlab-repo/

|-> README.md

|-> applications/

|-> blog/ #

|-> master.yaml # Default branch, lands on production URL. Generated by a CI job!

|-> example_branch.yaml # Staging branch, deploys on another domain as staging. Generated by a CI job!

|-> another_website/

|-> master.yaml

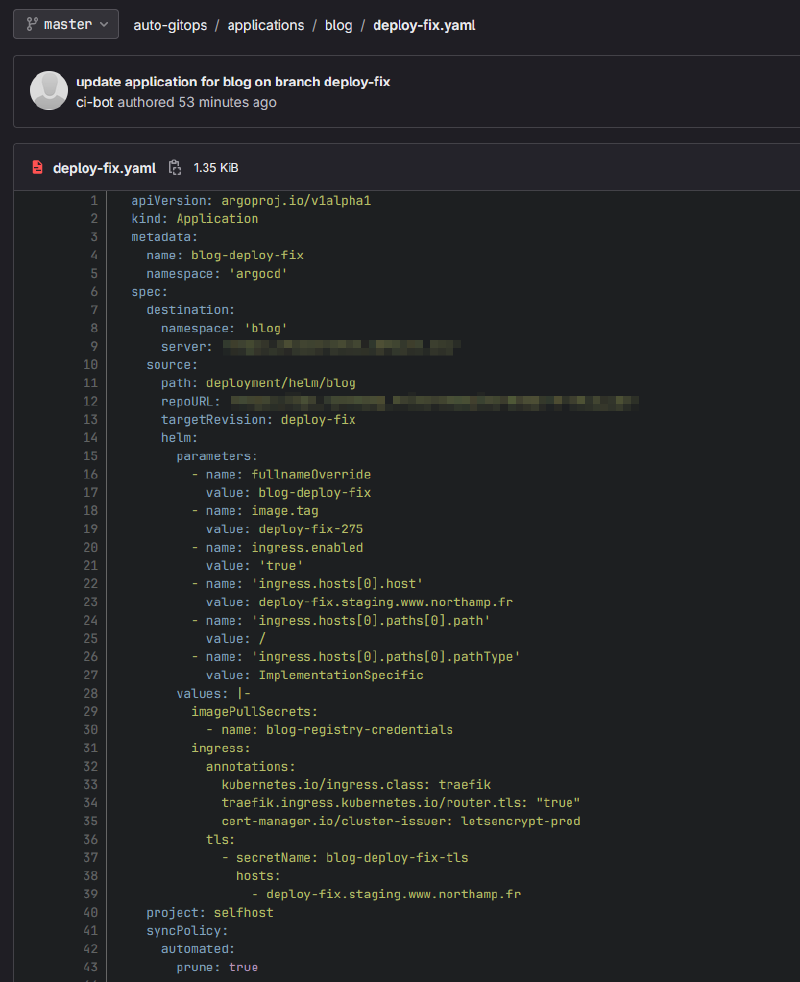

|-> example_branch.yamlThus, after running a pipeline, the following file would be generated:

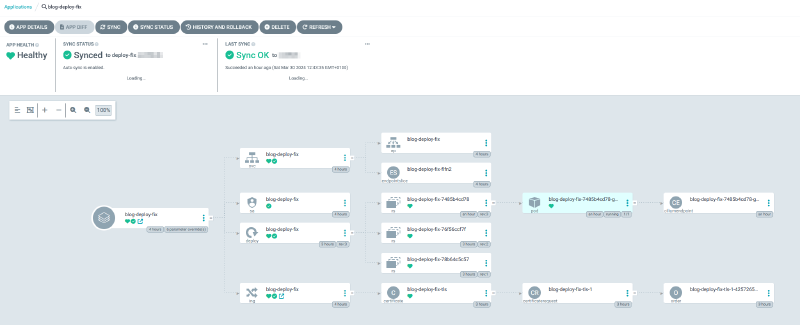

Which would be picked up and deployed by ArgoCD:

… And all is right with the world :)

Wrapping it up #

Not much more to be said, beyond the fact that it is, again, very convoluted and could likely be simplified; this automated deployment pattern however fits nearly every containerized, Gitlab-hosted, Argo-deployed project I can think of.

The main weak point I can envision is the manifest deletion job: if it runs before another job that creates the manifest anew, the deployment will live on forever until it’s manually deleted from the auto-gitops repo.
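One possible way to narrow that window (not something the pipeline above does) would be to have the creation job check that the branch still exists before writing the manifest. A minimal sketch, relying on `git ls-remote --exit-code`, which exits with a non-zero status when no ref matches; the `branch_exists` helper and the `WEBSITE_REPO_URL` variable are assumptions made for this example:

```shell
#!/bin/sh
# Hypothetical helper: succeed only if branch $2 still exists on remote $1.
# git ls-remote --exit-code exits with status 2 when no matching ref is found.
branch_exists() {
  git ls-remote --exit-code "$1" "refs/heads/$2" >/dev/null 2>&1
}

# Sketch of a guard for the manifest creation job. WEBSITE_REPO_URL is an
# assumed variable, not one defined by the pipeline in this article.
if [ -n "${WEBSITE_REPO_URL:-}" ]; then
  if branch_exists "$WEBSITE_REPO_URL" "${CI_COMMIT_BRANCH:-}"; then
    echo "Branch still exists, writing manifest"
  else
    echo "Branch is gone, skipping manifest creation"
  fi
fi
```

This only shrinks the race rather than removing it, since the branch could still be deleted between the check and the push.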

If I notice more issues or fix some, I’ll update this article as necessary. I may also make a boilerplate group project to showcase it entirely, instead of letting the reader (you!) skim through the entire thing without seeing a concrete example.