(skip to the end for the actual solution)

Like any good story, it starts with a murder #

On this fine day of January, as I was toying with Blacklight Revive modules and attempting to implement mutators on a server, I noticed it was getting OOMKilled by the K8s orchestrator pretty much as soon as it started.

At first I figured it was my C# that was so terrible it leaked memory like an open tap, but it persisted even after rolling back my mildly scuffed module attempts.

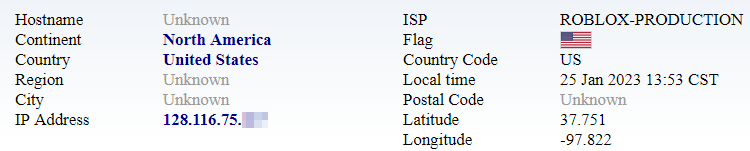

A short investigation later revealed the culprit of that particular instance was from across the pond according to geotools, and allegedly related to Roblox (of all things):

Now I don’t know what’s going on in that IP range (it’s just one of many, I didn’t bother keeping track beyond the first dozen IPs), but my hunch is that the actual evil lies in a botnet that, probably unwittingly, knocks out the blacklight server as it tries to connect to it (looking for a fellow zombie or something).

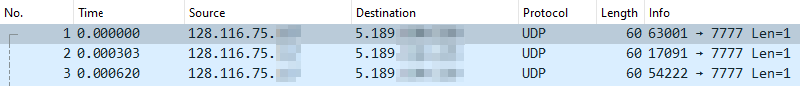

The odd thing is the sheer number of packets sent in a short amount of time, which makes me doubt this theory. Doesn’t really matter in the end, so shrug.

This graph only factors in one attack from a single IP, represents what’s received by only one of the nodes, doesn’t contain blacklight’s replies (more on that in a second), and there were dozens of them per hour on the busiest days.

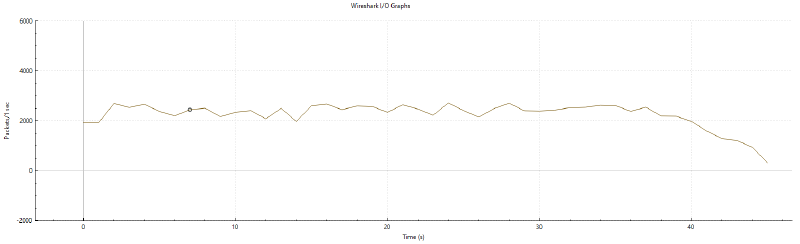

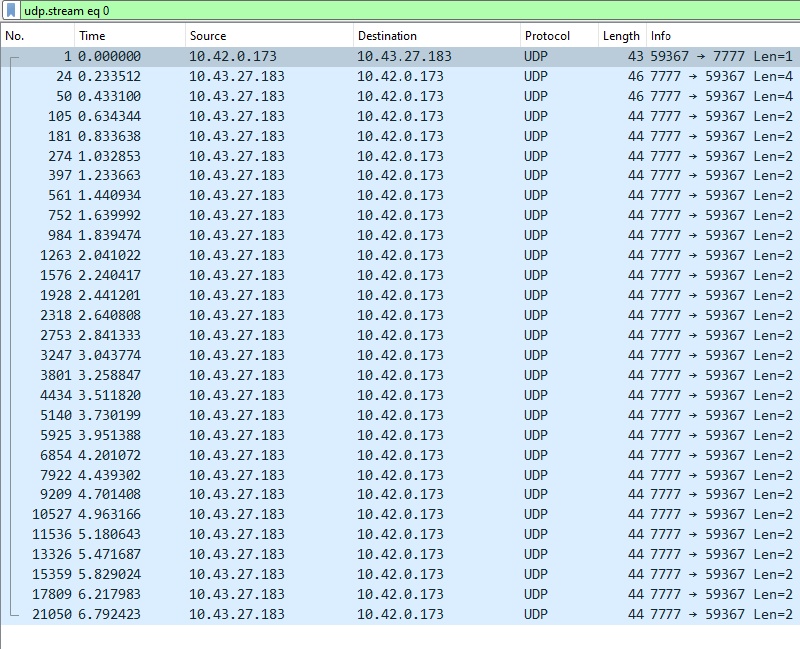

As you can tell, the game didn’t really like that, and nor did (/does) my infrastructure. Thankfully, each packet is just a single-byte payload (always the same too: 0x3E).

But the real kicker is that blacklight, for some very likely reasonable and easily explained reason, decides to reply to each of these connections - they have a different client port every time, so I guess the server hopes it’s a new game client every time.

Moreover, it replies not just once, but 28 times, over the span of around ten seconds.

On top of that, memory usage shoots through the roof, which upsets Kubernetes, which pulls the plug on the Pod (as per my instructions), and we’re back to square one.

So, we’ve got hundreds of thousands of packets flowing in, and a service that very inconveniently replies to each of them. Ideally, the solution would simply be a firewall rule that drops this particular stupid packet, right? Well it was slightly more complicated than that.

Some words on my architecture #

The entire thing runs on a Kubernetes cluster spread across several VPS. The blacklight server can run on any of the nodes, which is a bit overkill (and is sort of the point, I’m using the cluster as a test bed) but allows pretty high availability (when it doesn’t get drowned in packets that is) since:

- The game files are available on a shared storage

- A load-balancer carried by each node exposes the game port

- The game’s HTTP API is exposed by an ingress, but it doesn’t really matter here

- The domain name given to people (blrevive.northamp.fr) resolves to every node that runs said load-balancer

So any firewall rule I want to apply would have to be set on each node, which isn’t complicated in and of itself. However, as per the Kube distribution’s guidelines, I’m using iptables-legacy instead of nftables, despite the latter being the built-in firewall solution for my (GNU+)Linux OS.

The Iptables way #

The u32 module exists. That’s all I can say about it, I hate mathematics and playing with numbers, whether or not they’re base10.

I’ve tried lots of rules such as:

```shell
# doesn't work!
iptables -I INPUT 1 -m u32 --u32 "29&0xFF=0x3E" -p udp --dport 7777 -j LOG --log-prefix "possible-spam"
```

But none worked, so I’ll leave it at that!
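One possible culprit is the offset arithmetic. As documented in the iptables-extensions man page, u32 reads four bytes starting at the given offset, so `29&0xFF` isolates byte 32 of the packet, not byte 29. Assuming a 20-byte IPv4 header without options followed by the 8-byte UDP header, the first payload byte sits at offset 28, which `25&0xFF` would select. The Python sketch below emulates that read on a hand-built packet; `u32_extract` and `build_ipv4_udp` are hypothetical helpers written for this illustration, not part of any tool.

```python
import struct


def u32_extract(packet: bytes, start: int, mask: int):
    """Emulate a u32 'start&mask' read: 4 big-endian bytes at `start`, ANDed with `mask`.

    Returns None when the 4-byte read would run past the end of the packet,
    which is the case where a u32 rule simply does not match.
    """
    if start + 4 > len(packet):
        return None
    (word,) = struct.unpack_from("!I", packet, start)
    return word & mask


def build_ipv4_udp(payload: bytes, sport: int = 40000, dport: int = 7777) -> bytes:
    """Hypothetical minimal IPv4+UDP packet: 20-byte IP header, no options, checksums zeroed."""
    total_len = 20 + 8 + len(payload)
    ip_header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, total_len, 0, 0, 64, 17, 0,
        bytes([192, 0, 2, 1]), bytes([192, 0, 2, 2]),
    )
    udp_header = struct.pack("!HHHH", sport, dport, 8 + len(payload), 0)
    return ip_header + udp_header + payload


flood = build_ipv4_udp(b"\x3e")          # 29 bytes in total
print(u32_extract(flood, 29, 0xFF))      # None: bytes 29-32 do not exist
print(u32_extract(flood, 25, 0xFF))      # 62, i.e. 0x3E: byte 28, the first payload byte
```

If that reading is right, `--u32 "25&0xFF=0x3E"` would be the expression to try (untested here). Traffic that gets DNATed towards a pod may also go through FORWARD rather than INPUT, so the chain is another thing worth checking.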

The Nftables way #

I discovered pretty recently that xt_u32 was being deprecated by RHEL 8 in favor of nftables’ “raw payload expression” rules (no complaints from me).

Digging into it a bit, it turns out it’s much easier to stomach than u32: it has a similar way to point to the byte you want, but with the oh-so-convenient feature of letting one start directly at the transport header (@th), so filtering on the payload of a UDP packet would presumably be as easy as:

```shell
# @th: start at the transport header, i.e. the UDP header
# 64: offset in bits; skips the 8-byte UDP header mumbo jumbo to land on the payload
# 8: length in bits; reads the first byte of the payload
# 0x74: compares that byte with 0x74 (I was sending "t" as a test, to block the floods I'd have used 0x3E instead)
nft add rule inet test mangletest udp dport 7777 @th,64,8 0x74 log
```

Problem is, I’m stuck with iptables on my servers as mentioned earlier, and while it usually does the job (and very well), it does not in that particular case.
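The numbers in the raw payload expression above are easy to mix up: both the offset and the length are counted in bits, starting from the chosen base header. Here is a small Python sketch of that addressing, applied to a hand-built UDP datagram. `raw_payload` is a hypothetical helper for illustration, not an nftables API, and the source port is an arbitrary value.

```python
import struct


def raw_payload(data: bytes, offset_bits: int, length_bits: int) -> int:
    """Emulate nft's @base,offset,length on `data`, which starts at the base header.

    Returns the `length_bits` bits located `offset_bits` bits after the start.
    """
    end = offset_bits + length_bits
    total_bits = len(data) * 8
    if end > total_bits:
        raise ValueError("read past the end of the packet")
    as_int = int.from_bytes(data, "big")
    return (as_int >> (total_bits - end)) & ((1 << length_bits) - 1)


# UDP header: source port, destination port, length, checksum (zeroed here),
# followed by the one-byte test payload "t".
datagram = struct.pack("!HHHH", 40000, 7777, 9, 0) + b"t"

print(hex(raw_payload(datagram, 64, 8)))  # 0x74: what @th,64,8 looks at
print(raw_payload(datagram, 16, 16))      # 7777: the destination port, @th,16,16
```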

So I got started on… dockerizing nftables. While I’d have preferred stopping the flood directly from the nodes, it wasn’t a possibility, so I figured I’d do it after the packet hits the load-balancer. While it would be more comfortable, I did not want to give Blacklight’s pod CAP_NET_ADMIN (I’m still scared by the possibly absurd amount of vulnerabilities the server has) and set the nft rules there.

I tried DNAT-ing connections and dropping the offending packets, which was far from comfortable, but seemed to work! But it broke the game connections too, preventing legitimate clients from reaching the game (which may be due to my poor nftables rules).

I had already tried to use an nginx UDP reverse proxy for debugging, so I figured I’d use it again, adding the nftables rules on top (plus the admin network cap) but I wasn’t too thrilled with the idea, especially since I wasn’t sure how the game actually liked nginx as a middleman: it worked fine when I was playing with bots, but I didn’t really want to ring Blacklight Revive’s Discord for beta testers (it’s hard enough to find regular players as is!).

I was aware of Envoy and knew that my favorite ingress (Traefik, five years+ and counting) also supports UDP proxying, both seeming like reasonable alternatives, then stumbled upon a solution put out by Google recently: Quilkin.

The Quilkin way #

Since it’s a relatively new arrival to the scene, I wasn’t aware Quilkin existed but I liked the concept of a reverse proxy rigged for gaming usage enough I figured I’d give it a spin. Then I noticed the capture filters in the docs. Oh my.

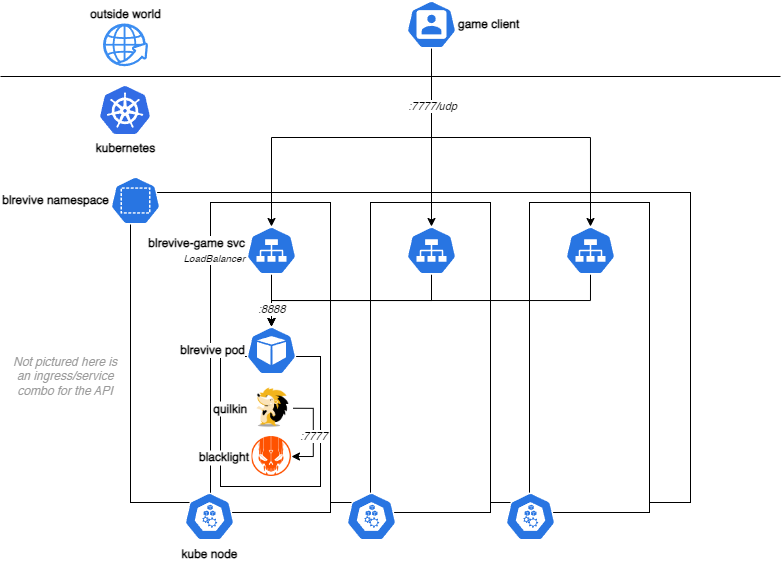

A hop and a skip later, I’ve got it running on my cluster in-between the load-balancer and the blrevive container:

Hastily thrown together diagram: Quilkin is set up as a sidecar to blacklight, and expects connections on port :8888 from the load-balancer to forward them to localhost:7777, where blacklight listens

I can connect to the game, excellent! I didn’t have a capture filter set at the time, and Quilkin started choking upon being hit by the thousands of packets when the bots attacked again:

```json
{"timestamp":"2023-03-09T23:05:57.130650Z","level":"ERROR","fields":{"message":"Address already in use (os error 98)","kind":"address in use"},"target":"quilkin::proxy","filename":"src/proxy.rs"}
```

Figured I’d sink a dozen more minutes into creating a quick Capture filter (who needs sleep anyway), which took me about 20 minutes (counting the ConfigMap logistics to mount the config inside Quilkin’s pod), then left it to its own devices.
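As a rough mental model, the Capture-then-Match chain from the configuration further down does the following with each datagram: grab the first byte, drop the packet when that byte is `>` (0x3E), pass it otherwise. The Python sketch below mirrors that logic and adds a stricter exact-match variant for comparison. The function names are made up for this illustration and none of it is Quilkin's actual code.

```python
FLOOD_BYTE = b">"  # the same byte as 0x3E


def capture_prefix(packet: bytes, size: int = 1) -> bytes:
    """Return the first `size` bytes of the datagram, leaving it untouched."""
    return packet[:size]


def match_on_prefix(packet: bytes) -> str:
    """Drop when the captured first byte is '>', pass otherwise."""
    return "drop" if capture_prefix(packet) == FLOOD_BYTE else "pass"


def match_exact(packet: bytes) -> str:
    """Stricter variant: drop only when the whole datagram is the single byte '>'."""
    return "drop" if packet == FLOOD_BYTE else "pass"


for packet in (b"\x3e", b">hello", b"t"):
    print(packet, match_on_prefix(packet), match_exact(packet))
```

The one-byte flood packet is dropped either way, but a longer datagram that merely starts with `>` is only dropped by the prefix version.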

Well, leaving it running for over a dozen hours and connecting to the metrics endpoint yields the following:

```
# HELP quilkin_packets_dropped_total Total number of dropped packets
# TYPE quilkin_packets_dropped_total counter
quilkin_packets_dropped_total{event="read",reason="quilkin.filters.match.v1alpha1.Match"} 32489426
```

So I guess that works! Despite 32 MILLION of those stupid packets (adding up to a grand total of 30MB or so I suppose :), the pod hasn’t crashed, I can still connect to the game, and no other issue has arisen (so far).
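The back-of-the-envelope numbers can be checked with a few lines of Python. The sketch below parses the counter from the metrics text shown above (pasted in as a string; `counter_total` is a hypothetical helper). It assumes every dropped packet carried the usual one-byte payload and would otherwise have triggered the 28 replies mentioned earlier.

```python
METRICS = """\
# HELP quilkin_packets_dropped_total Total number of dropped packets
# TYPE quilkin_packets_dropped_total counter
quilkin_packets_dropped_total{event="read",reason="quilkin.filters.match.v1alpha1.Match"} 32489426
"""


def counter_total(text: str, metric: str) -> int:
    """Sum every sample of `metric` found in Prometheus text-format output."""
    total = 0
    for line in text.splitlines():
        if not line.strip() or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        name_and_labels, _, value = line.rpartition(" ")
        if name_and_labels.split("{", 1)[0] == metric:
            total += int(float(value))
    return total


dropped = counter_total(METRICS, "quilkin_packets_dropped_total")
payload_mib = dropped * 1 / 2**20   # one payload byte per packet (assumption)
replies_avoided = dropped * 28      # 28 replies per packet, as observed earlier

print(f"{dropped} packets dropped")
print(f"{payload_mib:.2f} MiB of payload")
print(f"{replies_avoided} replies never sent")
```

That works out to roughly 31 MiB of payload, and a little over 900 million replies the server never had to send.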

For reference, here’s my current YAML spinning all these things up (mostly to demo Quilkin usage, check docker-blrevive for the latest BLRE specific version):

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: blrevive-config
  namespace: blrevive
  labels:
    app: blrevive
data:
  MARS_DEBUG: "True"
  MARS_SERVER_EXE: "BLR.exe"
  MARS_GAME_SERVERNAME: "MyNemSerb"
  MARS_GAME_PLAYLIST: "KC"
  MARS_GAME_NUMBOTS: "2"
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: blrevive-serverutils-config
  namespace: blrevive
  labels:
    app: blrevive
data:
  server-utils.config: |
    {
      "hacks": {
        "disableOnMatchIdle": 1
      },
      "mutators": {
        "HeadshotsOnly": 0
      },
      "properties": {
        "GameForceRespawnTime": 30.0,
        "GameRespawnTime": 3.0,
        "GameSpectatorSwitchDelayTime": 120.0,
        "GoalScore": 3000,
        "MaxIdleTime": 180.0,
        "MinRequiredPlayersToStart": 1,
        "NumEnemyVotesRequiredForKick": 4,
        "NumFriendlyVotesRequiredForKick": 2,
        "PlayerSearchTime": 30.0,
        "RandomBotNames": [
          "Frag Magnet",
          "Spook",
          "OOMKilled",
          "Rakbhu",
          "Server Fault",
          "kernel panic",
          "WINE_CXX_EXCEPTION",
          "ACCESS_VIOLATION",
          "CODE c0000005",
          "Firestarter",
          "Stainless Kill",
          "Bomberman",
          "Wireshark",
          "A bot",
          "A real player",
          "A smooth criminal",
          "This bot sponsored by",
          "Contabot",
          "Prometheus"
        ],
        "TimeLimit": 10,
        "VoteKickBanSeconds": 1200
      }
    }
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: quilkin-config
  namespace: blrevive
  labels:
    app: blrevive
data:
  quilkin.yaml: |
    version: v1alpha1
    admin:
      address: "[::]:9091"
    id: blrevive-proxy
    port: 8888
    clusters:
      default:
        localities:
          - endpoints:
              - address: 127.0.0.1:7777
    filters:
      - name: quilkin.filters.capture.v1alpha1.Capture
        config:
          metadataKey: blrevive/packet
          prefix:
            size: 1
            remove: false
      - name: quilkin.filters.match.v1alpha1.Match
        config:
          on_read:
            metadataKey: blrevive/packet
            branches:
              - value: ">"
                name: quilkin.filters.drop.v1alpha1.Drop
            fallthrough:
              name: quilkin.filters.pass.v1alpha1.Pass
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blrevive
  namespace: blrevive
  labels:
    app: blrevive
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blrevive
  template:
    metadata:
      labels:
        app: blrevive
    spec:
      initContainers:
        - name: server-utils-config
          image: busybox
          command: ['/bin/sh', '-c', 'cp /tmp/server_config.json /mnt/server_utils']
          volumeMounts:
            - name: blrevive-serverutils-dir
              mountPath: /mnt/server_utils
            - name: blrevive-serverutils-config
              mountPath: /tmp/server_config.json
              subPath: server_config.json
      containers:
        - name: blrevive
          image: registry.gitlab.com/northamp/docker-blrevive:0.4.0-release
          imagePullPolicy: Always
          envFrom:
            - configMapRef:
                name: blrevive-config
          # Wine debug messages
          # env:
          #   - name: WINEDEBUG
          #     value: "warn+all,+loaddll"
          ports:
            - name: game
              containerPort: 7777
              protocol: UDP
            - name: api
              containerPort: 7778
              protocol: TCP
          resources:
            requests:
              memory: "1024M"
              cpu: "0.25"
            limits:
              memory: "2048M"
              cpu: "2"
          volumeMounts:
            - mountPath: /mnt/blacklightre/
              name: blrevive-gamefiles
              readOnly: true
            - mountPath: /mnt/blacklightre/FoxGame/Logs
              name: blrevive-logs
            - mountPath: /mnt/blacklightre/FoxGame/Config/BLRevive/server_utils
              name: blrevive-serverutils-dir
        - name: quilkin
          image: us-docker.pkg.dev/quilkin/release/quilkin:0.5.0
          # If file configuration isn't used:
          # args: ["proxy", "--port", "8888", "--to", "127.0.0.1:7777"]
          args: ["proxy"]
          ports:
            - name: proxy
              containerPort: 8888
              protocol: UDP
          livenessProbe:
            httpGet:
              path: /live
              port: 9091
            initialDelaySeconds: 3
            periodSeconds: 2
          resources:
            requests:
              memory: "128M"
              cpu: "0.25"
            limits:
              memory: "512M"
              cpu: "1"
          volumeMounts:
            - mountPath: /etc/quilkin/quilkin.yaml
              subPath: quilkin.yaml
              name: quilkin-config
              readOnly: true
      securityContext:
        runAsUser: 1000
        runAsGroup: 1000
        fsGroup: 1000
      volumes:
        - name: blrevive-gamefiles
          persistentVolumeClaim:
            claimName: blrevive-pv-claim
        - name: blrevive-logs
          emptyDir: {}
        - name: blrevive-serverutils-dir
          emptyDir: {}
        - name: blrevive-serverutils-config
          configMap:
            name: blrevive-serverutils-config
            items:
              - key: server-utils.config
                path: server_config.json
        - name: quilkin-config
          configMap:
            name: quilkin-config
            items:
              - key: quilkin.yaml
                path: quilkin.yaml
---
apiVersion: v1
kind: Service
metadata:
  name: blrevive-game
  namespace: blrevive
  labels:
    app: blrevive
spec:
  type: LoadBalancer
  ports:
    - name: game
      port: 7777
      targetPort: proxy
      protocol: UDP
  selector:
    app: blrevive
---
apiVersion: v1
kind: Service
metadata:
  name: blrevive-api
  namespace: blrevive
  labels:
    app: blrevive
spec:
  ports:
    - name: api
      port: 80
      targetPort: api
      protocol: TCP
  selector:
    app: blrevive
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: blrevive-ingress-insecure
  namespace: blrevive
  annotations:
    kubernetes.io/ingress.class: "traefik"
spec:
  rules:
    - host: blrevive.example.com
      http:
        paths:
          - backend:
              service:
                name: blrevive-api
                port:
                  number: 80
            path: /
            pathType: ImplementationSpecific
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: blrevive-ingress
  namespace: blrevive
  annotations:
    kubernetes.io/ingress.class: "traefik"
    traefik.ingress.kubernetes.io/router.tls: "true"
    cert-manager.io/cluster-issuer: letsencrypt-prod
spec:
  rules:
    - host: blrevive.example.com
      http:
        paths:
          - backend:
              service:
                name: blrevive-api
                port:
                  number: 80
            path: /
            pathType: ImplementationSpecific
  tls:
    - hosts:
        - blrevive.example.com
      secretName: blrevive-example-com-tls
```

For now only two things remain:

- The Capture rule is a bit broad: it catches every packet starting with `>` and can lead to unpleasant side-effects. The solution might simply be to use a regex instead (could even be as simple as `/^>$/`, ha) but I haven’t had time to test it yet.

- The trial by fire, which implies having people to play with. Join BLRevive’s Discord!